GPU Financing Strategies That Work

Dec 12, 2025 | Compare GPU financing models: loans, leasing & tokenized credit. Fund AI infrastructure and deploy H100s faster without equity dilution.

The gap between AI ambition and available capital has never been wider. Companies that can deploy GPU infrastructure in weeks rather than months capture market share, while those waiting on traditional bank approvals watch opportunities slip away.

Financing high-performance computing hardware requires understanding models from equipment loans to tokenization-based credit, evaluating total ownership costs against deployment speed, and working with lenders who actually understand GPU economics. This guide breaks down the financing structures AI infrastructure operators use to fund deployments from $5 million to $300 million without diluting equity or waiting months for approval.

What Is GPU Financing?

GPU financing refers to specialized lending arrangements that let AI companies acquire high-performance computing hardware without paying the full cost upfront. Unlike traditional business loans—which can take months and focus heavily on your company's credit history—GPU financing typically uses the processors themselves as collateral, which speeds up approval and opens doors for newer companies. For context, a small AI infrastructure deployment might cost $5 million, while larger operations can run $300 million or more.

The key difference here is that lenders evaluate the GPU hardware itself, not just your balance sheet. They're looking at factors like resale value, the specific chip model, and how you plan to generate revenue from the computing power. This approach works because modern GPUs hold their value relatively well and produce measurable income through compute services.

Here's what sets GPU financing apart:

- Timeline: Specialized lenders can close deals in 7–30 days compared to 60–120 days at traditional banks

- Collateral structure: GPUs get registered under warehouse receipts governed by Article 7 of the Uniform Commercial Code (UCC-7), creating clear legal ownership records

- Evaluation criteria: Lenders assess hardware specs and market demand rather than just your company's financial history

The result is a financing system built for AI infrastructure, where hardware becomes available in tight windows and deployment speed directly impacts your competitive position.

Key Costs In AI Computing Power

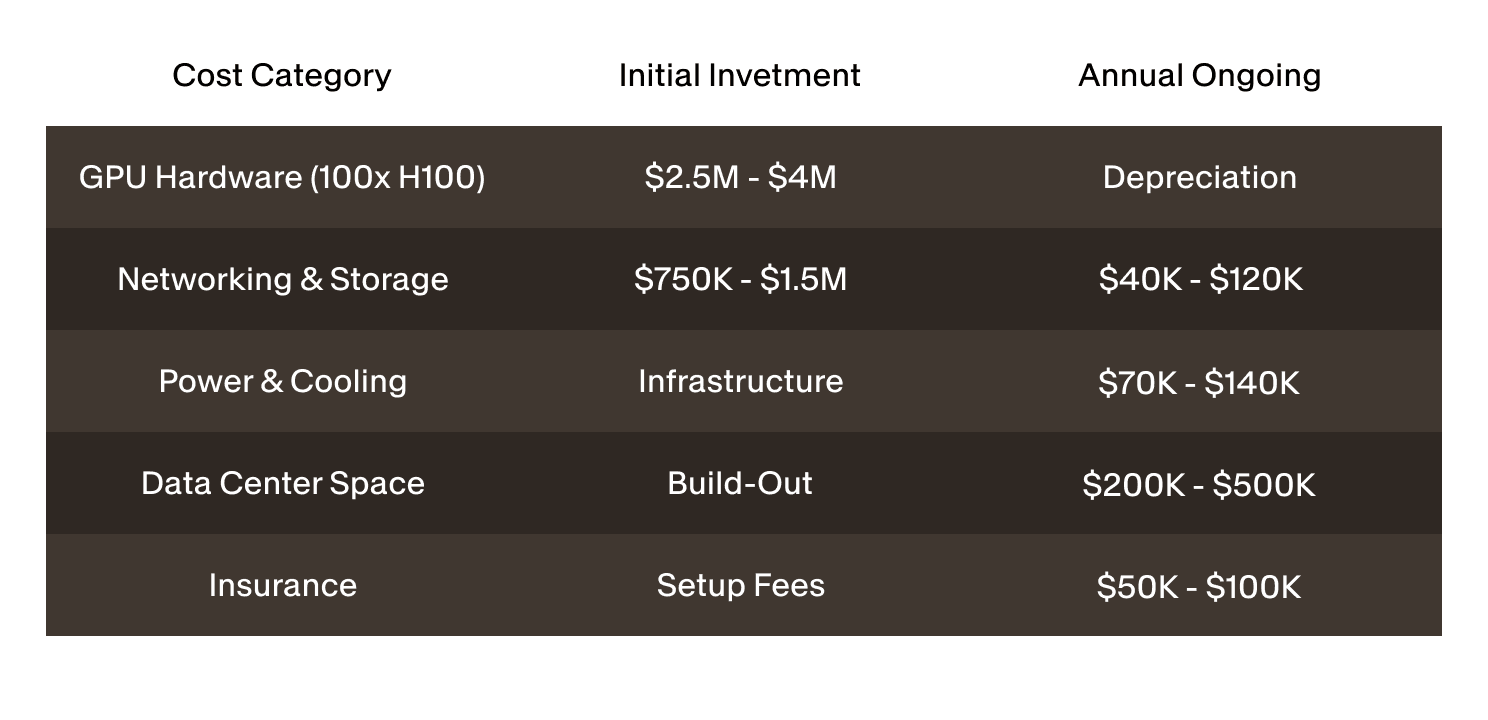

The sticker price on GPUs represents just the starting point for AI infrastructure costs. A single NVIDIA H100 GPU costs between $25,000 and $40,000, but you'll also need networking equipment, power systems, cooling infrastructure, and rack space. These additional components typically add 30–50% to your hardware budget before you can run your first workload.
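To make the budget math concrete, here is a minimal sketch that applies the 30–50% overhead to the GPU price range above. It assumes the overhead is measured as a percentage on top of GPU spend, and the 100-GPU cluster size is an illustrative choice, not a quoted deal.

```python
def cluster_capex(gpu_count, price_per_gpu, overhead_fraction):
    """Return (gpu_cost, overhead_cost, total_cost) in dollars.

    overhead_fraction covers networking, power, cooling, and rack space,
    expressed as a fraction of GPU spend (an assumption of this sketch).
    """
    gpu_cost = gpu_count * price_per_gpu
    overhead_cost = gpu_cost * overhead_fraction
    return gpu_cost, overhead_cost, gpu_cost + overhead_cost


# Illustrative 100-GPU cluster at the low and high ends of the quoted ranges.
low_case = cluster_capex(100, 25_000, 0.30)
high_case = cluster_capex(100, 40_000, 0.50)

for label, (gpus, overhead, total) in (("low", low_case), ("high", high_case)):
    print(f"{label}: GPUs ${gpus:,.0f} + overhead ${overhead:,.0f} = ${total:,.0f}")
```

Under these assumptions the same 100-GPU cluster lands anywhere from roughly $3.25 million to $6 million, which is why lenders ask for exact models and quantities.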

Power consumption creates one of the largest ongoing expenses. An H100 draws roughly 700 watts when running at full capacity, so a 100-GPU cluster uses 70 kilowatts continuously. Depending on your data center location, that translates to $50,000–$100,000 in annual electricity costs. Cooling systems to handle that heat can add another 20–40% to your power bill.
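The electricity figures can be reproduced with a few lines of arithmetic. This sketch assumes the cluster runs at full draw all year and uses illustrative rates of $0.08 and $0.16 per kWh; those rates are assumptions chosen to bracket the range above, not quoted tariffs. It counts GPU draw only and leaves out CPUs, networking, and storage.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_power_cost(gpu_count, watts_per_gpu, usd_per_kwh, cooling_overhead=0.0):
    """Annual electricity cost in dollars for GPUs at full load all year.

    cooling_overhead is the extra fraction added to the power bill for
    cooling (for example 0.20 to 0.40).
    """
    load_kw = gpu_count * watts_per_gpu / 1000
    energy_kwh = load_kw * HOURS_PER_YEAR
    return energy_kwh * usd_per_kwh * (1 + cooling_overhead)


cluster_load_kw = 100 * 700 / 1000  # 70 kW for 100 H100s at about 700 W each
low_cost = annual_power_cost(100, 700, usd_per_kwh=0.08)   # assumed rate
high_cost = annual_power_cost(100, 700, usd_per_kwh=0.16)  # assumed rate
high_with_cooling = annual_power_cost(100, 700, 0.16, cooling_overhead=0.40)

print(f"Load: {cluster_load_kw:.0f} kW")
print(f"GPU power only: ${low_cost:,.0f} to ${high_cost:,.0f} per year")
print(f"High case with 40% cooling overhead: ${high_with_cooling:,.0f} per year")
```

Swapping in your own utility rate and expected average load gives a quick first estimate before you model the facility in detail.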

Different financing models address these cost layers in distinct ways. A standard equipment loan covers your upfront hardware purchase but leaves you responsible for all operational expenses. Leasing arrangements might bundle maintenance into your monthly payments. Newer tokenization-based financing can even create liquidity against future compute revenue.

Top Financing Models For AI GPUs

The right financing structure depends on your timeline, cash flow, and long-term hardware plans. Each model offers different tradeoffs between speed, control, total cost, and flexibility. Let's look at the four main approaches AI infrastructure operators use today.

1. Loans And Equipment Financing

Equipment loans work like traditional debt—a lender provides capital to buy GPUs outright, and you repay over 24–60 months with interest. The hardware serves as collateral, often through non-recourse agreements where the lender's claim is limited to the GPUs themselves rather than your other business assets.

The mechanics typically involve creating a special-purpose vehicle (SPV) that legally owns the hardware. You grant the lender a security interest in those GPUs, documented through UCC-7 electronic warehouse receipts. These receipts can be tokenized on blockchain systems for transparency, though traditional paper documentation works too.

The main advantages include immediate ownership, tax benefits from depreciation, and lower total cost since you're only paying principal and interest. The drawback is that you bear all obsolescence risk—if GPU technology leaps forward, you're holding depreciating assets with an outstanding loan balance. Qualification usually requires either existing compute revenue or solid customer commitments.
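To see what "principal and interest" means in cash terms, the sketch below applies the standard fixed-payment amortization formula. The 12% annual rate and $3 million principal are illustrative assumptions; actual GPU loan pricing depends on the lender and the deal.

```python
def monthly_payment(principal, annual_rate, months):
    """Level monthly payment on a fully amortizing loan."""
    r = annual_rate / 12  # simple monthly rate
    if r == 0:
        return principal / months
    return principal * r / (1 - (1 + r) ** -months)


def total_interest(principal, annual_rate, months):
    """Interest paid over the full term, in dollars."""
    return monthly_payment(principal, annual_rate, months) * months - principal


# Assumed inputs: $3M financed at 12% a year, across the 24-60 month range.
for term in (24, 36, 60):
    pay = monthly_payment(3_000_000, 0.12, term)
    interest = total_interest(3_000_000, 0.12, term)
    print(f"{term} months: ${pay:,.0f}/month, ${interest:,.0f} total interest")
```

Longer terms lower the monthly payment but raise total interest and stretch the loan further past the point where newer GPU generations arrive.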

2. Leasing And Lease-To-Own

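GPU leasing comes in two forms: operating leases and capital leases (called finance leases under current U.S. GAAP). With an operating lease, you pay monthly for GPU access over 12–36 months, then return the hardware, renew, or buy it at fair market value. Operating leases were historically kept off the balance sheet, although under ASC 842 most leases longer than 12 months now appear there as a right-of-use asset and a lease liability. This model works well when you expect rapid technology changes or have variable compute demand.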

Capital leases function more like financed purchases. You record the asset on your balance sheet and claim depreciation, but you also carry the liability. The economic reality resembles equipment financing, just packaged differently for accounting purposes.

Lease-to-own arrangements blend both approaches. You start with lower monthly payments but build equity toward eventual ownership. This structure gives you breathing room during early revenue growth while preserving the option to own the hardware long-term. The tradeoff is cost—lessors typically charge 15–30% more than direct financing over equivalent terms because they're pricing in their capital costs and residual value risk.

3. On-Chain And Tokenization-Based Financing

Tokenization-based financing represents the newest approach, using blockchain infrastructure to create transparent representations of GPU assets. You form an SPV that holds GPUs, then issue digital tokens representing fractional ownership or revenue rights in those assets. Lenders buy these tokens in exchange for capital, providing non-dilutive financing secured by the hardware.

The tokens function as UCC-7 electronic warehouse receipts, giving holders a legal security interest in the underlying GPUs. Because these receipts exist on blockchain networks, they're transparent, auditable, and tradable on secondary markets—creating liquidity for lenders and potentially reducing your borrowing costs.

This model offers speed through smart contract automation, transparency since all parties can verify collateral status on-chain, and global access since crypto-native lenders can participate without traditional banking infrastructure. The challenges include regulatory uncertainty in some jurisdictions and the need for reliable hardware custody systems. You'll also need platforms that understand both structured credit and blockchain technology—a relatively small but growing field.

4. Consumption-Based Or Pay-Per-Use

Pay-per-use models eliminate upfront capital requirements by charging only for GPU compute you actually consume. Cloud providers like AWS, Google Cloud, and Azure offer this through on-demand instance pricing, while specialized GPU clouds provide similar access to bare-metal infrastructure. You're renting compute capacity by the hour rather than owning hardware.

This approach makes sense for unpredictable workloads, development environments, or initial proof-of-concept phases before committing to owned infrastructure. You avoid depreciation risk, maintenance responsibilities, and data center complexity.

However, the economics shift dramatically at scale. On-demand GPU pricing typically runs 3–5x higher than the amortized cost of owned hardware over three years. For sustained workloads like continuous model training or high-volume inference, ownership becomes significantly cheaper once you cross utilization thresholds around 40–60%. Some operators use hybrid models—owning baseline capacity for predictable workloads while using cloud resources for peaks.
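A rough way to find your own threshold is to divide the all-in cost of owning a GPU by what the same GPU would cost to rent around the clock. The sketch below assumes a hypothetical $40,000 three-year owned cost per GPU (hardware, power, hosting, net of resale) and a hypothetical $3.00 per GPU-hour on-demand rate. It also treats the owned cost as fixed regardless of utilization, which is a simplification.

```python
HOURS_PER_YEAR = 24 * 365


def breakeven_utilization(owned_cost_per_gpu, on_demand_rate, years=3):
    """Utilization (0 to 1) at which renting costs the same as owning."""
    rented_at_full_use = on_demand_rate * HOURS_PER_YEAR * years
    return owned_cost_per_gpu / rented_at_full_use


def cheaper_option(utilization, owned_cost_per_gpu, on_demand_rate, years=3):
    """Return 'own' above the breakeven utilization, otherwise 'rent'."""
    threshold = breakeven_utilization(owned_cost_per_gpu, on_demand_rate, years)
    return "own" if utilization > threshold else "rent"


# Hypothetical inputs for illustration only.
threshold = breakeven_utilization(owned_cost_per_gpu=40_000, on_demand_rate=3.00)
print(f"Breakeven utilization: {threshold:.0%}")
print("At 70% utilization:", cheaper_option(0.70, 40_000, 3.00))
print("At 30% utilization:", cheaper_option(0.30, 40_000, 3.00))
```

With these inputs the breakeven sits near the middle of the 40–60% range; cheaper cloud pricing or higher ownership costs push it up.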

Evaluating Your Financing Options

Choosing the right financing structure requires analyzing your deployment scenario, cash flow, and strategic priorities. There's no universally best option—only the approach that aligns with your operational reality.

1. Comparing Total Cost Of Ownership

Total cost of ownership extends beyond interest rates to include all expenses over your hardware's productive life. Start by calculating the present value of all cash flows: purchase price or lease payments, interest or financing fees, maintenance costs, insurance, power and cooling, and eventual resale value.
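The present-value step can be sketched as follows. The discount rate and every dollar figure in the example are hypothetical placeholders; the point is the structure, with outflows as positive numbers and resale proceeds netted against the final month.

```python
def present_value(cash_flows, annual_discount_rate):
    """Discount monthly cash flows to today; cash_flows[0] occurs now."""
    monthly_rate = (1 + annual_discount_rate) ** (1 / 12) - 1
    return sum(cf / (1 + monthly_rate) ** month
               for month, cf in enumerate(cash_flows))


def owned_cash_flows(upfront, monthly_cost, months, resale_value):
    """Upfront payment, then monthly costs, with resale netted at the end."""
    flows = [upfront] + [monthly_cost] * months
    flows[-1] -= resale_value
    return flows


def leased_cash_flows(monthly_lease, monthly_opex, months):
    """No upfront payment; lease plus operating costs each month."""
    return [0.0] + [monthly_lease + monthly_opex] * months


# Hypothetical 36-month comparison at an assumed 10% discount rate.
owned = owned_cash_flows(upfront=600_000, monthly_cost=95_000,
                         months=36, resale_value=900_000)
leased = leased_cash_flows(monthly_lease=110_000, monthly_opex=15_000, months=36)

print(f"Owned PV of costs:  ${present_value(owned, 0.10):,.0f}")
print(f"Leased PV of costs: ${present_value(leased, 0.10):,.0f}")
```

Running each option through the same function over the same horizon keeps the comparison honest, since a dollar of resale value in month 36 is worth less than a dollar of down payment today.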

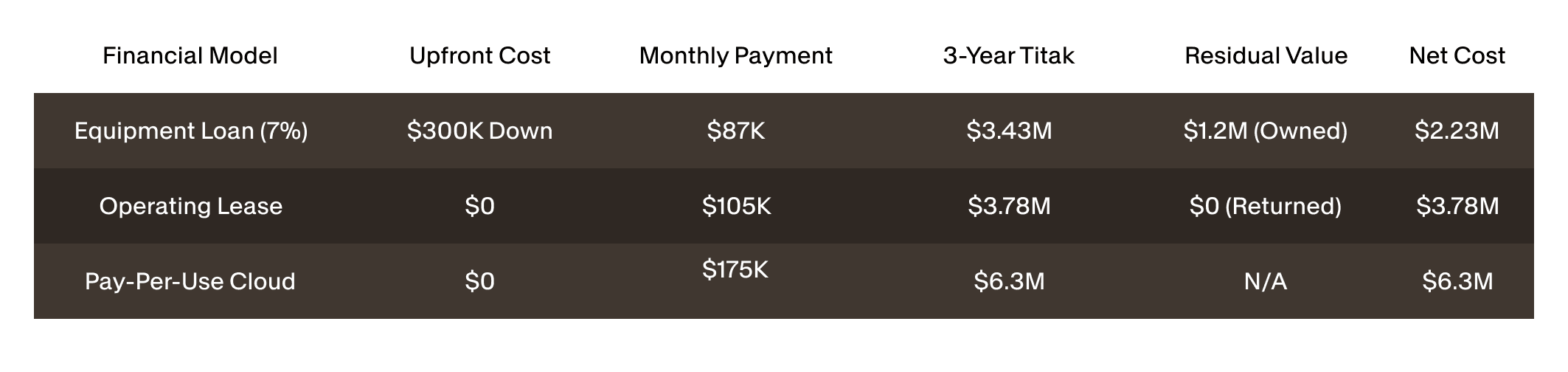

For a direct comparison, model each financing option over identical time horizons—typically 36 or 60 months. Here's a simplified example for a $3 million GPU deployment:

This analysis shows that while leasing eliminates upfront costs, it's 69% more expensive than financed ownership over three years. Cloud computing costs nearly three times more than owned infrastructure for sustained workloads. However, raw numbers don't capture optionality value—the ability to return obsolete hardware, scale quickly, or avoid stranded assets.

Tax implications further complicate the picture. Owned GPUs qualify for accelerated depreciation, potentially including 100% bonus depreciation under current U.S. tax law, significantly reducing effective cost for profitable entities. Lease payments are fully deductible as operating expenses but don't generate depreciation benefits.

2. Balancing Speed Versus Control

Different financing models impose different constraints on operational control. When you own hardware outright—through cash purchase or equipment financing—you control upgrade timing, redeployment decisions, and eventual sale. You can move GPUs between data centers, reconfigure clusters, or sell into secondary markets without lender approval.

Leasing arrangements typically restrict these freedoms. Your lease agreement may specify approved data center locations, limit hardware modifications, or require lender consent for early termination. These constraints rarely matter during normal operations but can become costly if your business pivots.

Key control considerations:

- Physical possession: Owned hardware can be relocated; leased equipment often requires lessor approval

- Maintenance authority: Ownership means you choose service providers and upgrade schedules

- Redeployment flexibility: Ownership lets you shift hardware between workloads or sell capacity to third parties without seeking consent

Tokenization-based financing offers a middle ground. You maintain operational control through the SPV structure while lenders hold security interests. As long as you meet payment obligations and maintain insurance, you operate with near-owner flexibility despite the debt.

3. Assessing Scalability And Flexibility

Your financing structure can either enable or constrain growth. Look for arrangements that allow incremental capacity additions without renegotiating entire agreements. Some lenders offer accordion features that let you draw additional capital against new GPU purchases under pre-approved terms.

Contract terms around early payoff and refinancing significantly impact strategic flexibility. Equipment loans with prepayment penalties lock you into original terms even if better financing becomes available. Conversely, loans without prepayment penalties let you refinance opportunistically or pay down debt if cash flow improves.

For rapidly scaling operations, financing structures that evaluate individual GPU clusters rather than overall corporate credit become essential. Asset-based lenders who underwrite hardware quality can approve multiple tranches as you commission new capacity, whereas traditional banks typically require annual credit reviews.

Managing Risk And Obsolescence

GPU technology evolves rapidly, with new architectures emerging every 18–24 months that can make current hardware significantly less competitive. NVIDIA's progression from A100 to H100 illustrates this dynamic—each generation delivers substantial performance improvements that compress pricing on previous models.

Obsolescence risk manifests differently across financing structures. Owned hardware exposes you to full depreciation risk but gives flexibility to upgrade on your timeline. Leasing transfers some obsolescence risk to the lessor, who prices this exposure into lease rates. Pay-per-use models eliminate the risk entirely but at premium pricing.

Insurance represents another critical layer. Most lenders require comprehensive coverage including property insurance for fire, theft, and damage, plus liability insurance and sometimes business interruption coverage. Annual premiums typically run 1–2% of hardware value, adding $30,000–$60,000 annually for a $3 million deployment.

Secondary markets for GPU hardware have matured significantly, creating exit options that didn't exist a few years ago. You can often sell current-generation GPUs at 50–70% of original cost after 18–24 months, and even older hardware finds buyers for inference workloads. This residual value cushions obsolescence risk and factors into financing decisions.
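One way to see how residual value cushions risk is to compare the expected resale value with the loan balance still outstanding at that point. The sketch below assumes a hypothetical $3 million deployment financed at 80% loan-to-value, a 12% annual rate, and a 36-month term, and applies the 50–70% resale range to the original hardware cost.

```python
def remaining_balance(principal, annual_rate, months, payments_made):
    """Outstanding balance on a level-payment loan after some payments."""
    r = annual_rate / 12
    if r == 0:
        return principal * (1 - payments_made / months)
    payment = principal * r / (1 - (1 + r) ** -months)
    growth = (1 + r) ** payments_made
    return principal * growth - payment * (growth - 1) / r


def collateral_coverage(hardware_cost, resale_fraction, balance):
    """Resale value divided by outstanding debt; above 1.0 means covered."""
    return hardware_cost * resale_fraction / balance


# Hypothetical deal terms, for illustration only.
hardware_cost = 3_000_000
loan = 0.80 * hardware_cost
balance_18m = remaining_balance(loan, 0.12, 36, payments_made=18)

for fraction in (0.50, 0.70):
    cover = collateral_coverage(hardware_cost, fraction, balance_18m)
    print(f"Resale at {fraction:.0%}: coverage {cover:.2f}x "
          f"on ${balance_18m:,.0f} outstanding")
```

Under these assumptions the hardware would still be worth more than the debt at month 18 even at the low end of the resale range; a longer term or a steeper price drop narrows that margin.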

Fast-Track Approval And Funding Tips

Speed matters in GPU financing because hardware allocation windows are often narrow—particularly for the latest high-demand chips. Missing your delivery slot can mean months of additional waiting while competitors capture market opportunities.

1. Preparing Documentation And Collateral

Lenders evaluating GPU financing requests assess both the hardware's value and your ability to generate revenue from it. Start by assembling detailed hardware specifications: exact GPU models, quantities, purchase orders or allocation confirmations, and expected delivery dates. Include data center information like location, power capacity, cooling infrastructure, and network connectivity specifications.

Financial documentation demonstrates cash flow sufficiency to service debt. Provide current financial statements, revenue projections tied to GPU deployment, and customer contracts or letters of intent if available. For newer entities without operating history, detailed business plans showing compute pricing assumptions and utilization targets strengthen your application.

2. Working With Specialized Lenders

Traditional banks rarely understand GPU economics—depreciation curves, utilization metrics, or secondary market dynamics. Specialized lenders who focus on AI infrastructure bring sector expertise that translates to faster approvals and more flexible structures.

When evaluating potential financing partners, ask about their GPU lending history: How many deals have they closed? What's their typical loan-to-value ratio? Do they understand tokenization structures or on-chain collateral verification? Lenders who can speak fluently about compute density and OEM allocation dynamics are more likely to move quickly and structure deals intelligently.

3. Timing Your GPU Purchases

OEM allocation systems for high-demand GPUs like H100s operate on quarterly or semi-annual cycles with long lead times. Securing financing before your allocation window opens lets you move immediately when hardware becomes available rather than scrambling for capital after receiving a delivery date.

Pre-approved credit facilities work well for this scenario. You negotiate financing terms in advance, then draw funds as GPUs ship and are verified. This approach eliminates financing uncertainty from your deployment timeline and can strengthen your position with OEMs, who prefer customers with confirmed funding.

Moving Forward With GPU Financing

The financing structure you choose shapes your capital costs, operational flexibility, and competitive positioning. Operators who secure efficient financing can deploy capacity faster and scale more aggressively, while those struggling with capital constraints often find themselves behind the deployment curve.

Start by defining your priorities: Do you value ownership and control above all else? Is preserving working capital essential? How important is upgrade flexibility versus lowest total cost? Your answers will point toward the financing model that fits your situation.

For AI infrastructure operators seeking fast, transparent GPU financing, USD.AI provides specialized asset-backed credit structures designed specifically for compute deployments. Our tokenization-based approach enables 7–30 day execution cycles while maintaining institutional rigor and transparency.

The key is moving decisively once you've identified the right approach. GPU markets move quickly, and financing that arrives too late delivers no value. Capital availability shouldn't become the constraint that limits your growth in AI infrastructure.

FAQs About AI GPU Financing

How does insurance work for GPU financing?

Most lenders require comprehensive property and liability insurance covering the full replacement value of financed GPUs, with the lender named as loss payee. Borrowers typically pay premiums of 1–2% of hardware value annually, and coverage must remain current throughout the loan term.

Can I combine multiple financing models for phased AI deployments?

Yes, many operators use hybrid approaches—financing initial capacity through equipment loans while leasing additional GPUs for peak demand, or owning baseline infrastructure while using pay-per-use cloud for overflow. This strategy optimizes capital efficiency across different workload characteristics.

Are there specialized underwriting requirements for data centers?

Lenders typically evaluate power capacity measured in megawatts, cooling infrastructure through PUE ratios, physical security, network connectivity, and facility certifications like SOC 2 or ISO 27001. Tier III or IV data centers generally receive more favorable terms due to reduced operational risk.